After skipping it last year (I did NaNoWriMo instead) I decided that I missed doing National Novel Generating Month and thought I'd do something relatively simple, based on Tom Phillips' A Humument, which I recently read for the first time. Phillips' project was created by drawing over the pages of the forgotten Victorian novel A Human Document, leaving behind a handful of words on each page which form their own narrative, revealing a latent story in the original text.

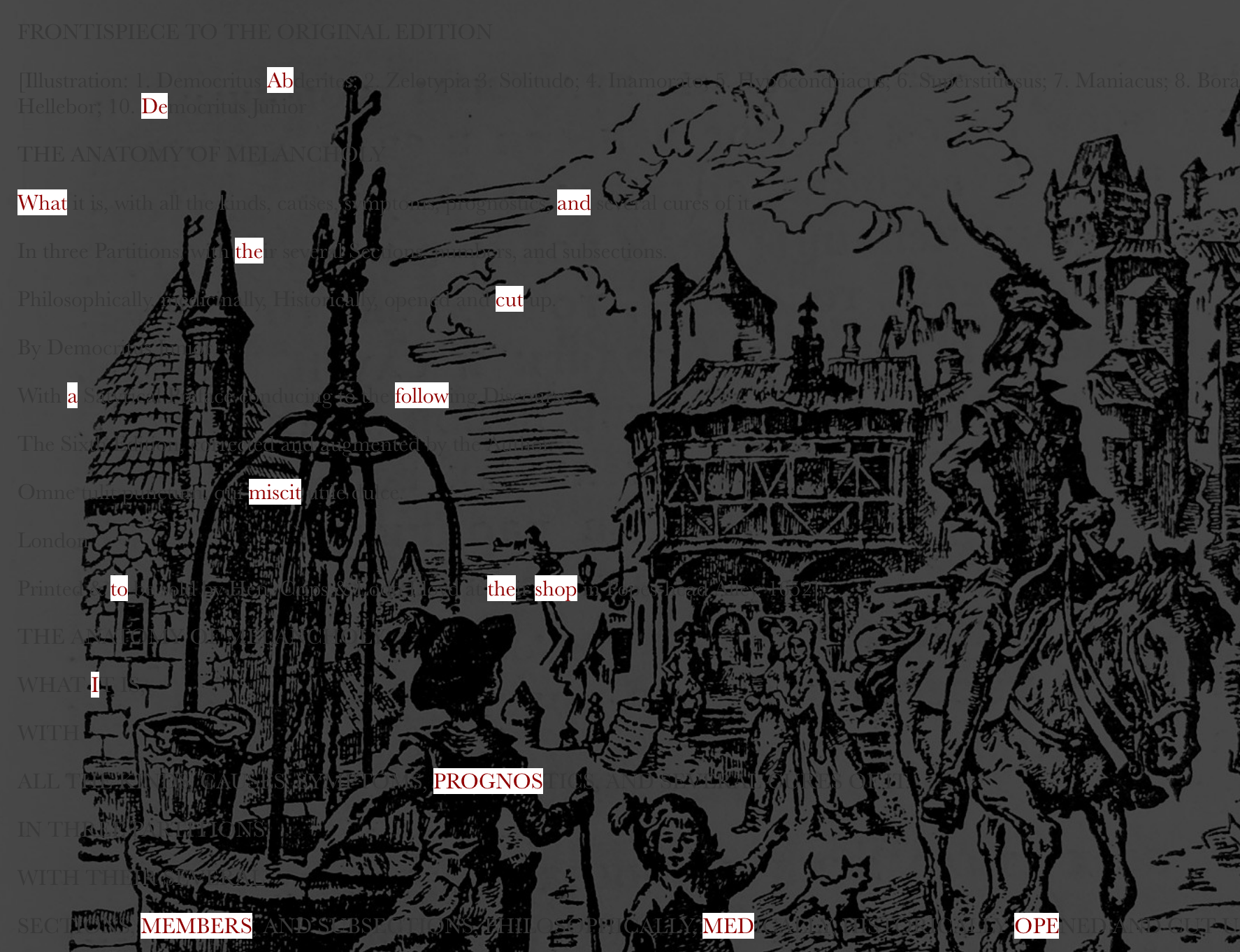

I wanted to simulate this process by taking a neural net trained on one text and using it to excavate a slice from a second text, a slice which would somehow preserve the style of the RNN. To get to the target length of 50,000 words, the second text would have to be very long, so I picked Robert Burton's The Anatomy of Melancholy, which is over half a million words, and one of my favourite books. It's a seventeenth-century treatise on the diagnosis and cure of melancholy but also a great work of Elizabethan prose, and it's already a Frankenstein's monster, a great baggy patchwork of all the books its author had consulted. Burton himself refers to it as a cento, a poem constructed from the lines of other poems, so using it seemed to fit the spirit of both the exercise and the book itself.

I trained the RNN on Dumas' The Three Musketeers, for no particular reason, other than I had started reading it earlier this year and then gotten distracted, and because it felt like it would have its own distinct flavour. Luckily, it's the sort of text that's easy for an RNN to imitate.

This post goes into a bit of detail on the algorithm and fine-tuning the results: you can go straight to the full web version here: I PROGNOS MEMBERS

An RNN with constraints

I've used J C Johnson's torch-rnn for all of my text-RNN projects but had never really modified it before this. Generating text is a matter of running the neural net, which produces one character at a time: at each step it outputs a weighted set of probabilities, one for each of its tokens (every character which was in the training corpus), and the next character is sampled from them. I forked the original sampling script and added a hook which could modify the weights as they came out of the RNN, setting to zero the probability of any letter I didn't want.
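To make the idea of the hook concrete, here is a minimal sketch in Python rather than the Lua of the real sampling script. The names `constrain` and `sample` and the toy distribution are invented for illustration, and the renormalisation step is an assumption about how the masked weights get turned back into probabilities.

```python
import random

def constrain(probs, allowed):
    """Zero the probability of every token not in `allowed`, then renormalise."""
    masked = {tok: (p if tok in allowed else 0.0) for tok, p in probs.items()}
    total = sum(masked.values())
    if total == 0.0:
        raise ValueError("constraint leaves no token with non-zero probability")
    return {tok: p / total for tok, p in masked.items()}

def sample(probs, rng=random):
    """Draw one token according to its (possibly masked) probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Toy distribution standing in for one step of RNN output.
step = {"e": 0.5, "a": 0.25, "t": 0.125, " ": 0.125}

# Forbid the letter e: everything else keeps its relative weight.
no_e = constrain(step, allowed=set(step) - {"e", "E"})
print(no_e)  # {'e': 0.0, 'a': 0.5, 't': 0.25, ' ': 0.25}
```

Because the mask is applied afresh at every step, the same function can carry a fixed constraint for a whole run or a different one for each character.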

To test this, I got the RNN to generate lipograms - for earlier experiments on these lines, see this post - by suppressing the same characters for an entire run. Taking all of the e's and E's out of Dumas yielded results which were so good that I spun them off into a side project: Athos & Porthos & Aramis & d'AtGuy:

“That is to say, you may rid you to try and said that this is an approach, mad, monstrous! This is that of this loving man.”

“That is truly, that is truly, monstrous agitation?”

“It is that you would blow my own sword.”

“What do you say?”

“I say that it is not that which I having thrown your hors but my honor to bring him I am running about it. It is not that that which I should bring that day and his companions and that housing many distant. I will announcing that which I having him a fortnight, and that you arriving at that month of this som of any in this man.”

“That is truly,” said thought, “a sort of bravo, and I will turn him to think of your loving.”

Because the RNN uses the LSTM (long short-term memory) design, fooling with its output in these minor ways doesn't really disturb its sense of grammar.

Another way to tweak the output was to constrain only the first letter of each word: for example, here is the neural net which powers @GLOSSATORY but forced to start every word with 'b':

BEAU: bee believed by bony beef

BACTERIA BONY: bottled butterfly but bony bacteria based by beating bottles between bronchial birds by blowing birds

BURDEN BILE: butter blended by black barlels but black basilisks

BLADDER BLUE: black bearing brownish burrowing birds bearing branches back

BIRCH: blow-bearing birds bearing bright blue berries blended by bier bats

BROAD BAT: barley band bearing brownish bark but black beetles
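The first-letter constraint can be sketched as a function of the text generated so far. This is a Python illustration under my own assumptions, not the code behind the samples above: `allowed_tokens` is a made-up name, and it treats any non-letter (space, hyphen, colon) as a word break.

```python
def allowed_tokens(so_far, tokens, letter="b"):
    """Return the tokens the RNN may emit next.

    At the start of a word only `letter` (in either case) and non-letters
    are permitted; inside a word every token is allowed.
    """
    at_word_start = not so_far or not so_far[-1].isalpha()
    if not at_word_start:
        return set(tokens)
    return {t for t in tokens if not t.isalpha() or t.lower() == letter}

tokens = set("abcB -:")
print(sorted(allowed_tokens("", tokens)))      # [' ', '-', ':', 'B', 'b']
print(sorted(allowed_tokens("bony ", tokens))) # [' ', '-', ':', 'B', 'b']
print(allowed_tokens("bon", tokens) == tokens) # True
```

The returned set is what would be handed to the weight-masking hook at each step.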

Vocabularies

The next step was to use this to implement the excavate algorithm, which works like this:

1. read a vocab from the next L words of the primary text (Burton), where L is the lookahead parameter

2. take the first letter of every word in the vocab and turn it into a constraint

3. run the RNN with that constraint to get the next character C

4. prune the vocab to those words with the first letter C, with that letter removed

5. turn the new vocab into a new constraint and go back to 3

6. once we've finished a word, add it to the results

7. skip ahead to the word we picked, and read more words from the text until we have L words

8. go back to 2 unless we've run out of original text, or reached the target word count
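The steps above can be sketched as a single loop. This is a Python outline under several assumptions, not the Lua implementation: `excavate`, `choose` and `END` are hypothetical names, `choose` is a stand-in for the constrained RNN, the source words are assumed to be non-empty, and a word may only end when it matches a whole source word (a simplification of how word endings are really handled).

```python
END = " "  # stand-in for any end-of-word token (space or punctuation)

def excavate(words, lookahead, target, choose):
    """Return (source_index, word) pairs excavated from `words`.

    `choose(allowed)` stands in for the constrained RNN: it receives a sorted
    list of permitted characters and returns one of them.
    """
    results = []
    start = 0
    while start < len(words) and len(results) < target:
        # Steps 1-2: the window of candidate words, each tagged with its index.
        vocab = [(i, w) for i, w in enumerate(words[start:start + lookahead], start)]
        word = ""
        while True:
            letters = {rest[0] for _, rest in vocab if rest}
            finished = [i for i, rest in vocab if not rest]
            allowed = letters | ({END} if finished else set())
            c = choose(sorted(allowed))        # step 3
            if c == END:
                break
            word += c
            # Step 4: keep candidates that continue with c, minus that letter.
            vocab = [(i, rest[1:]) for i, rest in vocab if rest[:1] == c]
        index = finished[0]                    # earliest matching source word
        results.append((index, word))          # step 6
        start = index + 1                      # step 7: skip past the pick
    return results

# A deterministic "RNN" that always takes the first permitted character.
words = "the cat sat on the mat".split()
picks = excavate(words, lookahead=3, target=10, choose=lambda allowed: allowed[0])
print(picks)  # [(1, 'cat'), (3, 'on'), (5, 'mat')]
```

Returning the source index alongside each word is a choice made for this sketch; it is the kind of information needed to match excavated words back to their place in the original text.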

Here's an example of how the RNN generates a single word with L set to 100:

Vocab 1: "prime cause of my disease. Or as he did, of whom Felix Plater speaks, that thought he had some of Aristophanes' frogs in his belly, still crying Breec, okex, coax, coax, oop, oop, and for that cause studied physic seven years, and travelled over most part of Europe to ease himself. To do myself good I turned over such physicians as our libraries would afford, or my private friends impart, and have taken this pains. And why not? Cardan professeth he wrote his book, De Consolatione after his son's death, to comfort himself; so did Tully"

RNN: s

Vocab 2: "peaks ome till tudied even uch on's o"

RNN: t

Vocab 3: "ill udied"

RNN: u

Final result: studied

The algorithm then restarts with a new 100-word vocabulary starting at "physic seven years".
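The pruning in this example can be replayed in a few lines of Python. The tokenizer here is an assumption (letters, with internal apostrophes kept so that "son's" survives), and the three characters are fed in by hand in place of the RNN.

```python
import re

PASSAGE = (
    "prime cause of my disease. Or as he did, of whom Felix Plater speaks, "
    "that thought he had some of Aristophanes' frogs in his belly, still "
    "crying Breec, okex, coax, coax, oop, oop, and for that cause studied "
    "physic seven years, and travelled over most part of Europe to ease "
    "himself. To do myself good I turned over such physicians as our "
    "libraries would afford, or my private friends impart, and have taken "
    "this pains. And why not? Cardan professeth he wrote his book, De "
    "Consolatione after his son's death, to comfort himself; so did Tully"
)

def tokenize(text):
    """Split into words, dropping punctuation but keeping internal apostrophes."""
    return re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)*", text)

def prune(vocab, c):
    """Keep the words that start with c, with that letter removed."""
    return [w[1:] for w in vocab if w[:1] == c]

def trace(text, chars):
    """Return the vocab after each character, as space-joined strings."""
    vocab = tokenize(text)
    steps = []
    for c in chars:
        vocab = prune(vocab, c)
        steps.append(" ".join(vocab))
    return steps

for c, vocab in zip("stu", trace(PASSAGE, "stu")):
    print(c, "->", vocab)
# s -> peaks ome till tudied even uch on's o
# t -> ill udied
# u -> died
```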

It works pretty well with a high enough lookahead value, although I'm not happy with how the algorithm decides when to end a word. The weight table always gets a list of all the punctuation symbols and a space, which means that the RNN can always bail out of a word half-way if it decides to. I tried constraining it so that it always finished a word once it had narrowed down the options to a single-word vocab, but when I did this, it somehow removed the patterns of punctuation and line-breaks - for example, the way the Three Musketeers RNN emits dialogue in quotation marks - and this was a quality of the RNN I wanted to preserve. I think a little more work could improve this.
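The two behaviours described here can be compared in a small Python sketch. The function name `constraint`, the `force_finish` flag and the exact terminator set (ASCII punctuation plus space and newline) are all assumptions for illustration.

```python
import string

# Assumed terminator set: every ASCII punctuation mark, space and newline.
TERMINATORS = frozenset(string.punctuation + " \n")

def constraint(vocab, force_finish=False):
    """Build the set of permitted next characters.

    `vocab` holds the remaining suffixes of the candidate words. By default
    the terminators are always permitted, so the RNN can bail out mid-word.
    With `force_finish`, a single unfinished candidate must be completed.
    """
    letters = {rest[0] for rest in vocab if rest}
    if force_finish and len(vocab) == 1 and letters:
        return letters
    return letters | TERMINATORS

print(" " in constraint(["died"]))            # True: may stop at "stu"
print(constraint(["died"], force_finish=True))  # {'d'}
```

In the forced variant the RNN gets no say over punctuation until the word is complete, which is one plausible reading of why the dialogue patterns disappeared.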

Technical hitches

As usual, more than half of the work was fixing incidental issues:

- The first version of the algorithm assumed that Lua would just handle Unicode strings, which it doesn't: this is why the first version of Athos & Porthos & Aramis & d'AtGuy keeps forgetting d'Artagnan's name.

- I wanted an HTML version which imitated A Humument, with the original text obscured except for the excavated words. Getting this working meant I had to pass in an index with each vocab word that I could match up when post-processing at the end.

- The version of Lua which Torch7 runs on has a hard 1GB RAM limit, and once I'd converted the whole of The Anatomy of Melancholy to a JSON document, I hit that, so I had to rework things so that it read the vocabulary in a line at a time, which is really what I should have done in the first place.
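The first of these hitches comes down to the fact that a Lua string is a sequence of bytes, so any character outside ASCII occupies more than one position. Here is the mismatch shown in Python; the typographic apostrophe is used purely as an illustration, not as a claim about which character tripped up the first version.

```python
def char_tokens(text):
    """One token per Unicode character."""
    return list(text)

def byte_tokens(text):
    """One token per UTF-8 byte, which is how a byte-oriented string sees it."""
    return [bytes([b]) for b in text.encode("utf-8")]

name = "d\u2019Artagnan"  # U+2019 RIGHT SINGLE QUOTATION MARK, for illustration

print(len(char_tokens(name)))  # 10
print(len(byte_tokens(name)))  # 12: the apostrophe alone is three bytes
```

Code which walks a string one position at a time will split such a character into fragments that match nothing in the RNN's token set.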

Lua is an interesting and fun language: it's high-level and dynamic, but ultra-light-weight and fast, and had me thinking like a C programmer for the first time in decades, which was not an experience I ever expected to enjoy.

Also, I used coroutines for the first time. Coroutines are pretty good for stringing together pipelines of small, simple functions, each of which feeds its output to the next: from a text file, to a vocabulary builder, to a weight tuner, to an RNN sampler.
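Python's generators are a more limited relative of Lua's coroutines, but they show the same pipeline shape. In this sketch the stage names are invented, the vocabulary is cut into fixed chunks rather than advanced by the sampler's pick, and the final stage stops at the first-letter constraint instead of running an RNN.

```python
import io
from itertools import islice

def read_words(lines):
    """Yield (index, word) pairs a line at a time, never holding the whole text."""
    index = 0
    for line in lines:
        for word in line.split():
            yield index, word
            index += 1

def vocab_chunks(indexed_words, size):
    """Group the word stream into vocabularies of at most `size` words."""
    it = iter(indexed_words)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk

def first_letter_constraints(chunks):
    """Turn each vocabulary into the sorted list of its first letters."""
    for chunk in chunks:
        yield sorted({word[0].lower() for _, word in chunk})

source = io.StringIO("Or as he did, of whom\nFelix Plater speaks,\n")
pipeline = first_letter_constraints(vocab_chunks(read_words(source), 4))
result = list(pipeline)
print(result)  # [['a', 'd', 'h', 'o'], ['f', 'o', 'p', 'w'], ['s']]
```

Each stage pulls only as much as it needs from the one before it, which is what keeps memory use flat however long the source text is.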

Tuning

Once I'd sorted all that out, I had to tune the lookahead value so that the algorithm could get 50,000 words of hybrid Dumas/Burton. The problem here was that high values of L give far more coherent results, but race through the available vocabulary in Burton and run out before they reach the word count. Here are some samples at progressively lower values:

L=1000

“Is it not that you will not finish the camp,” repeat he to him as if to see if they have seen in the common chamber.

“The dead will then temper,” said Anger holy strange pity, “the sat which I am a moth carper and play any other wants.”

“What do you mean to say that which I have not the left of his hope,” sai trust seeing her and play and repent as the down that is proceed.

L=500

“Who ar thinking show you have an idly to make me and find head of the provinces of our desp-to stead.”

“The dear glu live in the casu on you, I will and the cart with him who had not to do with the able to her husband.”

L=100

“Some of the carin?”

“I had a this cause of the company of the cart of the came of the far of the co low are the call and the bare to the ceremoni of the curious see, and way of composed of the watch.”

“Democrit! yet,” said de la S. At fire, and the service of the could of the thing he have said, “This inter tramp of the consume out ow me.”

L=50

“And not to the could of the stands of the call and the sea, may barr.”

“Sall habe, the cities, world?”

“We send a great loit.”

“Holland he in the price of things?”

“I as in more the drunken and reform of the countries.”

L=10

“Hi mort I a cresce?” de following, “P. A, A because which is to by of though have an an in this an and of this may be a stand and when I which my the gave of have an since mine.”

“He said well as a my here, to out of his pay in a paid, an any of my of to my life be as to be a some the said.”

It turned out that L=20 was about as high as I could go and still get to the word target. The quality was not what I'd hoped for based on my original experiments, but it was still ok. And skimming to the end - to make sure that the algorithm really hadn't run out of primary text before getting to 50,000 words - led to a really pleasant surprise.
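A back-of-envelope calculation gives a figure close to that L=20. It rests on two assumptions that the real run won't satisfy exactly: that the source is a round 500,000 words (it is somewhat more), and that the picked word is equally likely to be at any position in the window, so that each pick skips (L + 1) / 2 source words on average. The function name is made up for this sketch.

```python
def max_lookahead(source_words, target_words):
    """Largest L whose average skip still leaves room for `target_words` picks.

    Assumes the pick is uniform over window positions 1..L, so the mean
    number of source words consumed per pick is (L + 1) / 2.
    """
    budget = source_words / target_words  # source words available per pick
    return int(2 * budget - 1)            # solve (L + 1) / 2 <= budget for L

print(max_lookahead(500_000, 50_000))  # 19
```

If the RNN favours words near the front of the window the average skip is smaller and L can go a little higher; if it favours the back, lower.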

Synthetic macaronics

Based on my previous NaNoGenMo attempts, I was used to results that were the same all the way through. But The Anatomy Of Melancholy is an early modern text whose author would have preferred to be writing in Latin: he spends a couple of pages of the preface complaining that publishers will only take English books these days because they're too greedy. (Have I mentioned that I love this guy?) The entire text is studded with Latin maxims, as you can see from the examples above, but the endnotes, which the excavate algorithm dutifully chewed its way through, are almost entirely Latin. So the final "chapters" of I PROGNOS MEMBERS (the title of the final version) are in their own style, a wonderful patois of Latin vocabulary being jammed into the sentence structures of Project Gutenberg's English translation of Dumas. It's reminiscent of dog Latin or macaronics, the learned or schoolboyish game of mashing together vernacular and classical forms and vocabularies, a form of wordplay which Burton would certainly have recognised.

“Cap hos--a man tempor-.”

“Of which al A de lib cap in feri a ling cap, a lib. To morbi--”

“Man a ga--” rebu in P-Socrate or triumph. A sic obscuratur flor, a serenit it curae occupat transit hom a sit ampli-me. Cad, a loco destituto miserabilit immerg! Hu omn ignoras om hurn i lib.”

“Dist in tus violent hir.”

“Hildes, it ad ha!” conduc of Anima, “I c. Sp an of a cut divisio and templu cap a move or si. De lib.

Tu qua pag tame tu cap in c vit a more an in the c-l c-M. Ve an ance in l. Crus--sequant immorta abode of his c-rap. Besides, the body with with a dog with a prius fuerat alter, a libera civilibu-sed a contend to de Sen o Pessi in an in a sit de Cap de Cap de Cap Par. Co do tu hoc, a memorand cap cap--fol, a sit, I sun in a cap pra-s in tract conscientia to tract contradic--t is ob, in postum humid in a controver cap sect, tract a species fur hic hi aliquan evadi--a cap man hic in a has in a species in consult to tract a polit cap. A l. Prim--man, sin unde in sump causas in a substern tan.

“Ga, sub.”

I didn't discover this till I was about to publish it, and it made the whole exercise worthwhile.

Here is the final web version:

I've added a description of how to run the code, including the pre- and post-processing scripts, to the README.md file of the Excavate repo.

Source code

Future prospects

This kind of hybridisation can be applied to any RNN and base text, so there's a lot of scope for exploring how the grammar and style of one text can be grafted onto the words of another.

And the alliteration and lipogram experiments above are just two simple examples of more general ways in which I'll be able to tamper with the output of RNNs.

For comparison, here's the output of the inverse process, an RNN trained on The Anatomy of Melancholy run with the vocabulary of The Three Musketeers:

He that is a man is believe the same of the bold, and then as a companion of the bore of melancholy and memory, as the said [St. Ara and [St, Ara, D. Che St. Austria, the Pope of the Louv. But this is the preceding of the said [S. Mous's mild. [S]met. car. v. v.) "As this mad men arm as much as in the heard of a complexion, a mons-comfort, a thing in the body, and the find our time in the mean time they are in the mouse of it." The said She. St. All at la to the present spur our commencing of the believe and profit, and then as [She, "will have almost mad and discuss and precaution to the heart, and the life of the some of them all, and then to the bring and dreaming of the bones, and then she contradict it, and then again to be in a wife, a complacent, a servant, a half-how, a diamond, a door an order, a profound bowed to the sea, and in the head and sound, and the lift of love matters, and disappear in the money which we can do as murmured man should be a proper part of the body, they have been consent, and the life of the borrow of the sound of men, and then again to be a man to be use of it. A thing is treasure and sorry for a common to be consent. To this purpose which is the said [Sam. in his discreet metal-men of old, and the resum to the consequent part of the considerable part. [She [Germain in his travers is a companion of the mind in the said of old, to another of a stand before the strange contrary of the stars.