So I have opinions about the Google engineer who thinks that his chatbot is sentient, because of course I do. One of the things about people who work with computers is that we have opinions about AI which are not necessarily grounded in anything real about either intelligence or computers, but have their roots in a bunch of emotional factors which we don't talk about too much.

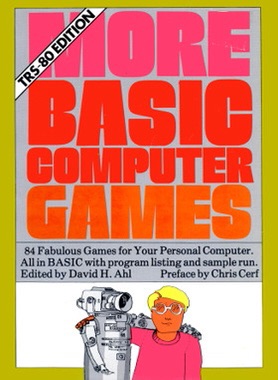

This is the sort of thing I grew up with, and when I showed this image to my son a couple of months ago, his reaction was "OMG they're dating". If I look back on the intensity with which I believed in the possibility of AI as a teenager, part of it was wanting to agree with Douglas Hofstadter, but a lot of it was an unspoken but acutely felt idea that a sentient computer would be an ideal friend: just as interested in abstract nerdy shit as I was, and less likely to want to get into a physical fight than most of the real humans seemed to be.

One of the real ethical dilemmas of AI is separating fact from science fiction when writing about it. The myth of AI (the way in which HAL-9000, C-3PO and their descendants are part of popular culture, as well as the specific, extremely detailed but equally imaginary ways in which the ideas have been developed in science fiction and transhumanism) is really powerful, and I think we're entering a confusing time in which our collective understanding of what's really going on in the field is going to be distorted or obscured by what we already believe about it.