I was tinkering around, trying to get Justin Johnson's neural-style implementation working on the HPC. This is an algorithm which uses a deep learning image recognition net to extract low-level style features from one image and use them to render the large-scale contours of another. The technique has been commercialised in apps like Prisma.
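For the curious: in the Gatys et al. approach that neural-style implements, the "style features" of a layer are summarised as a Gram matrix, i.e. the correlations between that layer's channels with the spatial layout thrown away. Here's a toy sketch of that idea in plain Python. It is not code from neural-style; the function names, the division by the number of positions, and the tiny hand-written "activations" are all my own assumptions for illustration.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of one layer's activations.

    `features` is a list of C channels, each a flat list of N activations
    (a hypothetical stand-in for a real C x H x W feature map).
    Dividing by N is one possible normalisation, assumed here.
    """
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
        for fi in features
    ]


def style_loss(generated, style):
    """Mean squared difference between the two Gram matrices."""
    g = gram_matrix(generated)
    s = gram_matrix(style)
    c = len(g)
    return sum(
        (g[i][j] - s[i][j]) ** 2 for i in range(c) for j in range(c)
    ) / (c * c)


# Made-up activations: 2 channels, 4 spatial positions each.
style_feats = [[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0]]

# Same activations with the spatial positions reversed in both channels.
rearranged = [[4.0, 3.0, 2.0, 1.0], [1.0, 0.0, 1.0, 0.0]]

# Second channel now fires at different positions relative to the first.
different = [[1.0, 2.0, 3.0, 4.0], [1.0, 0.0, 1.0, 0.0]]

print(style_loss(rearranged, style_feats))
print(style_loss(different, style_feats))
```

The rearranged features give a loss of zero because the Gram matrix only records which channels fire together, not where. That is why it captures texture and colour rather than the layout of the style image.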

I hit a dead end getting Torch to compile the dependencies, and then I noticed that the code used a Caffe model loader. So why not look for a Caffe implementation, as I already had Caffe working? I found this, which worked just fine.

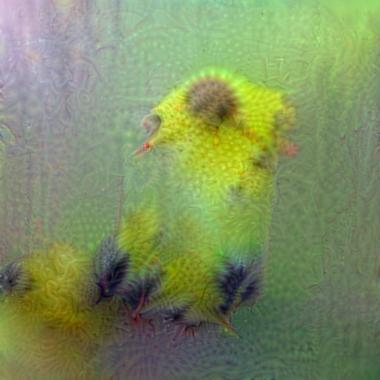

But then I realised that the Visual Geometry Group's neural nets, which these implementations run on, could also run the same deepdraw technique I used for my 2015 NaNoGenMo project, neuralgae. The results are lovely: this net produces much more colourful and detailed images, and if you push the parameters it gets trippy but still remains coherent, like something from the Codex Seraphinianus.

The neuralgae Twitter bot is running on this net now.