Setting aside the question of whether LaMDA is sentient, or whether the Turing Test is valid, or whether general AI is possible or likely (there are a lot of very strongly held opinions on the matter out there if you want to go looking for them), I've been thinking about what text generators are for.

Around five years ago I started playing with RNNs, but although I've tagged a lot of those posts 'ai' here, and like to anthropomorphise the bots I've based on them, they're obviously not AI, and their relationship to writing is a bit vexed. A former colleague of mine read this blog and referred to what I do as "digital poetics", which seems like an impressive turn of phrase for something which feels much more like hacking: fucking around with texts and code to provoke and amuse. A neural-net-fuelled text bot, in this light, is not so much a poetic machine as an automated shitpost generator, although sometimes Glossatory's output has a kind of weird beauty.

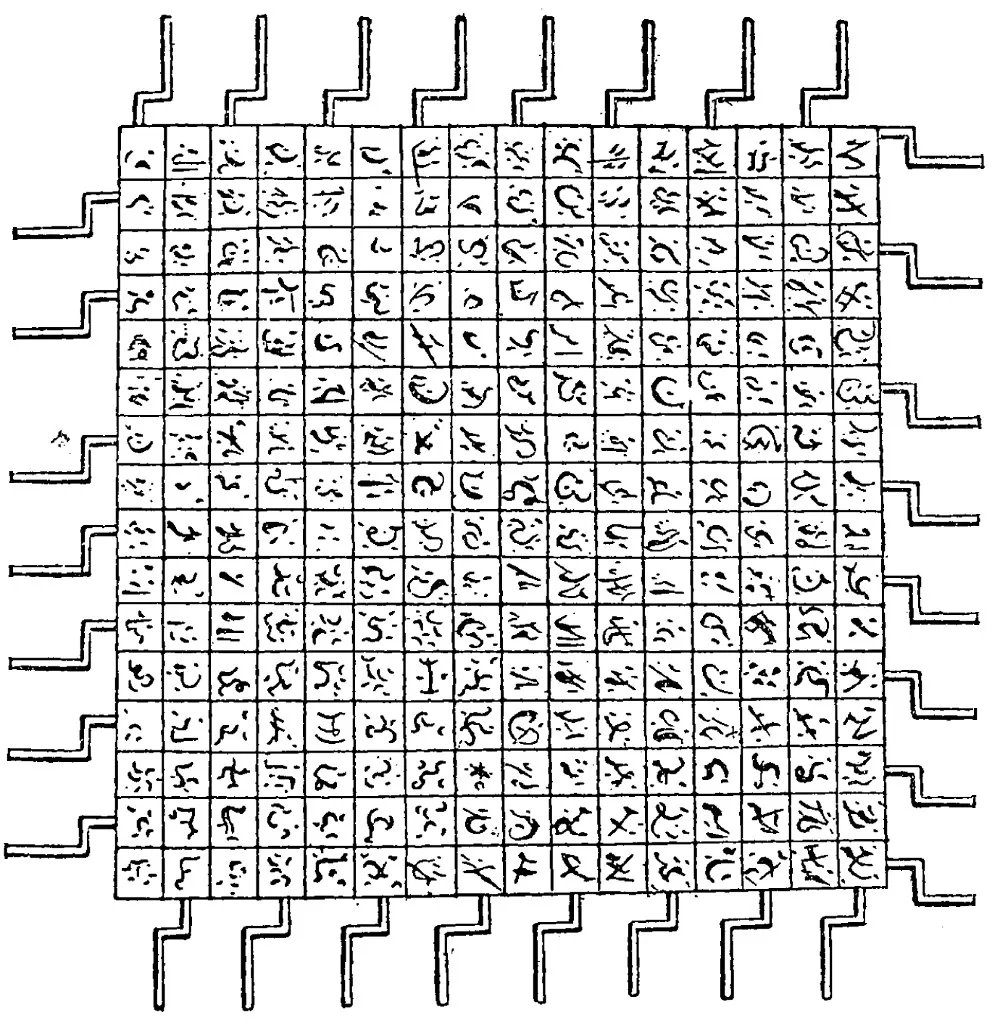

The non-digital realm of creative practice these things link back to is OuLiPo, even if they're not copying the most famous forms of lipogrammatic constraint from that tradition. I think any procedurally-generated text is in line with the Oulipian turn away from writing as some sort of expression of an otherwise inchoate force arising from the individual, towards a model which is ludic or even oracular. Tossing the yarrow sticks, casting stones, twirling the wheels on the side of the Laputan word-engine.

But what does Google want with all this? What's the use of a piece of software which can generate superficially plausible but, ultimately, content-free text? The current generation of text simulators are so fluent at first glance that one imagines them being used as a chat interface, maybe providing a human-seeming front end to a set of simple menus: but we can already do that with recorded prompts.

About six months ago I saw a blog post titled "We've Been Doing it the Wrong Way" on the IT forum Lobsters. The post was long and waffly, purporting to be the wisdom of a tech veteran but without any real examples or genuine content. For some reason it nagged at me for a couple of weeks, and when I returned to it, I convinced myself that it had been generated using GPT-3 or something similar. This seemed a more charitable explanation than the alternative, which was that someone had sat down and written it deliberately. What really stuck with me was revisiting the thread of comments on the article: it's a typical Lobsters thread, bickering around rather than about the topic, and none of the comments mention what was to me the most salient feature of the original post, which was that it didn't actually mean or say anything.

Baked into the Turing Test, and into most discussions of the act of writing, is the assumption that writing is an attempt at communication between one person and one or more other people. But there are plenty of other reasons to produce texts. I didn't decide to participate in blogjune because I thought that I had thirty great ideas for posts: I did it to get back into the habit of writing in a way which is different to writing in a journal. I like regular blogging and I wanted something which would spur me into doing it. That doesn't mean that these posts aren't a sincere attempt to communicate something, but that's not all that they are.

Other reasons to write: because you have been set an assignment, because you are applying for a job, because it's part of your job to generate content: a deck of slides, a blog post, an article or a report. A while after I'd read that waffly IT post I started to notice ads for AI-powered services which do just that, generating blog posts on demand, and for years an awful lot of content on the internet has consisted of insincere text produced to be harvested by Google, regardless of its intelligibility or usefulness to a potential reader.

At a smaller scale, word processors and services such as Grammarly have been acting as prosthetics to the act of writing for years. When I add image descriptions to the drawings I post to @GLOSSATORY I usually have to work against my phone's autocorrect when I type in the text prompt, an example of one AI struggling to normalise the output of another.

I can get into a strange and unhealthy mood pondering this; it's a bit too close to Dead Internet Theory for my liking. Perhaps it's because of my habit of playing with these things that I see in Google's work nothing more than what I might do if I had the resources: train an even better neural network on every text in the world just to see what kind of shitposts it can come up with.

And there's an Oulipian side of me that wants to imagine that GPT-3's descendants will actually be useful: that we can use sheer computing power to shortcut all the old-fashioned approaches to building AI systems which know stuff and can tell you about it, the way we've already done for vision and translation.

Clichés are the armature of the Absolute

—Alfred Jarry